Once upon a time, visual inspection of subsea infrastructure meant divers taking still cameras down to the seafloor, hoping that the camera casing wouldn’t flood and that the film wouldn’t be overexposed or destroyed during development. Even with the first remotely operated vehicles (ROVs) and their live video feeds, it was difficult to keep an inspection target in view for long, because control and positioning functionality was limited whenever there was any appreciable current.

It’s safe to say the industry has come a long way since, and imaging possibilities are expanding by the day. In fact, the problem is now becoming how to process the “tsunami” of data being gathered.

These issues were top of the agenda at a joint seminar held by The Hydrographic Society in Scotland (THSiS), the International Marine Contractors Association (IMCA) and the Society of Underwater Technology (SUT) in Aberdeen in October.

On the one hand, sensor platforms are evolving. Both ROVs and autonomous underwater vehicles (AUVs) are becoming faster to use. BP has a target to perform 100% of its subsea inspection with marine autonomous systems (MAS) by 2025, Peter Collinson, senior subsea and environmental specialist at the oil major, told the seminar.

They’re also becoming more autonomous, supported by onshore operations centers. Equinor has already trialed piloting ROV operations from onshore centers (via Oceaneering and IKM), and this year it is set to trial Eelume’s snake-like subsea robot, on a tether, in a subsea garage at the Åsgard field offshore Norway. By 2020, it hopes to go tetherless, Richard Mills, director of sales, Kongsberg Maritime Robotics, told the seminar. The next step could be using it in combination with unmanned surface vessels.

Meanwhile, imaging technologies, from laser to photogrammetry, are helping these platforms to gather more data, faster and, potentially, also help them navigate.

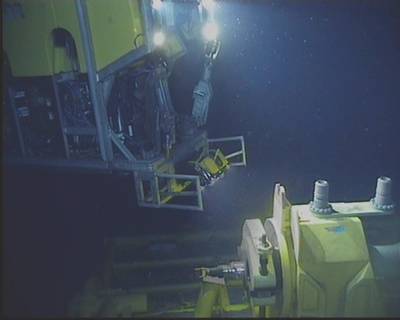

Subsea survey operations using an ORUS3D system. (Source: Comex Innovation)

Pretty – measurable – pictures

On-the-fly surveys are being done using photogrammetry. In summer 2018, Comex Innovation completed two North Sea offshore inspection projects using its ORUS3D underwater photogrammetry technology, having previously worked on projects in West Africa, said Raymond Ruth, U.K. and North Sea agent for Comex Innovation. Both operations, in the U.K. and Danish sectors of the North Sea, called for high-accuracy measurement; one was in support of brownfield modifications.

The ORUS3D subsea optical system takes measurements and creates high-resolution 3D models of subsea structures. Each system comprises an integrated beam of tri-focal sensors with four wide-beam LED flash units, plus a data acquisition and processing unit. It triangulates features within the captured images to localize its relative position and to build a 3D point cloud reconstruction that can be used for measurement, so neither an inertial navigation system nor scaling targets placed on the object are needed on site.
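
ORUS3D’s own algorithms aren’t described here, but the basic idea of triangulating a feature can be sketched. The Python below uses the textbook midpoint method with just two rays (ORUS3D’s tri-focal head would have three views), and it assumes each camera’s center and the viewing ray through a matched feature are already known in a common frame; in practice those poses must be estimated as well. The 0.2m baseline and feature position are made-up values.

```python
def _sub(a, b):
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])

def _add(a, b):
    return (a[0] + b[0], a[1] + b[1], a[2] + b[2])

def _scale(a, s):
    return (a[0] * s, a[1] * s, a[2] * s)

def _dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]

def triangulate_midpoint(c1, d1, c2, d2):
    """Estimate a 3D feature position from two viewing rays.

    Ray 1 is c1 + s*d1 and ray 2 is c2 + t*d2, where c1/c2 are camera
    centers and d1/d2 are directions towards the same image feature.
    Noisy rays rarely intersect exactly, so return the midpoint of the
    shortest segment joining them.
    """
    w0 = _sub(c1, c2)
    a, b, c = _dot(d1, d1), _dot(d1, d2), _dot(d2, d2)
    d, e = _dot(d1, w0), _dot(d2, w0)
    denom = a * c - b * b
    if abs(denom) < 1e-12:
        raise ValueError("rays are parallel; the feature cannot be triangulated")
    s = (b * e - c * d) / denom  # parameter of the closest point on ray 1
    t = (a * e - b * d) / denom  # parameter of the closest point on ray 2
    p1 = _add(c1, _scale(d1, s))
    p2 = _add(c2, _scale(d2, t))
    return _scale(_add(p1, p2), 0.5)

# Two hypothetical cameras 0.2 m apart, both viewing a feature at (0.5, 0.3, 1.5) m.
point = triangulate_midpoint((0.0, 0.0, 0.0), (0.5, 0.3, 1.5),
                             (0.2, 0.0, 0.0), (0.3, 0.3, 1.5))
print(point)
```

With noise-free rays, as here, the midpoint coincides with the true feature; with real image noise it gives a least-distance compromise between the two rays.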

The integrated unit fits onto an ROV for free-flying data acquisition at distances of more than 40cm from structures, although the optimal distance is 1-2m from the object. This takes no longer than a general video survey, Ruth said.

Initial onboard real-time processing is carried out to assess location and data quality, followed by onsite processing (on the support vessel) to further quality-check the data and create an initial scaled 3D model with centimeter-level accuracy. Final processing of the data, which is collected as a point cloud, then reconstructs the site or object as a 3D model with millimeter-level accuracy.

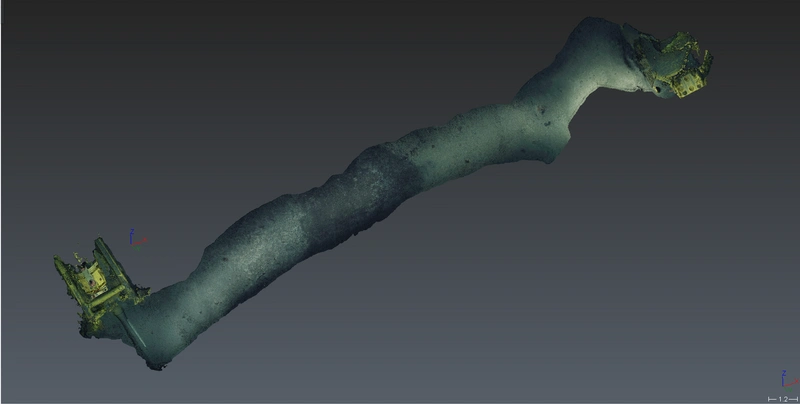

Results from an ORUS3D survey (Source: Comex Innovation)

Automated eventing

EIVA has been working on using machine learning and computer vision techniques to automatically detect objects such as anodes on pipe, pipe damage and marine growth with a conventional camera, said Matthew Brannan, senior surveyor, EIVA. Using machine learning means training a system with tens of thousands of pipeline images. This is what EIVA has been doing, and it’s bearing fruit. The company has run trials on existing data sets that were evented the traditional way, so the automated results could be compared with manual eventing. In late 2018, it also started live testing during ROV-based operations. The ultimate aim is automatic event recognition during an AUV survey, enabling an AUV to spot something and then send a message to a surface vessel, Brannan said.
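
Comparing automated events against a manually evented data set, as EIVA did, comes down to matching two event lists. The sketch below is one plausible way to score such a trial, not EIVA’s method: it assumes each event is reduced to a kilometer point (KP) and an event type, matches them greedily within a hypothetical 2m tolerance, and reports precision (the share of automated events confirmed by the manual eventing) and recall (the share of manual events the system found). The event lists are invented.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Event:
    kp: float   # kilometer point along the pipeline (hypothetical representation)
    kind: str   # event type, e.g. "anode", "damage", "marine_growth"

def score_events(automated, manual, tol_km=0.002):
    """Greedily pair automated events with unused manual events of the same
    kind within tol_km, and return (precision, recall)."""
    unmatched = list(manual)
    true_positives = 0
    for event in sorted(automated, key=lambda e: e.kp):
        candidates = [m for m in unmatched
                      if m.kind == event.kind and abs(m.kp - event.kp) <= tol_km]
        if candidates:
            best = min(candidates, key=lambda m: abs(m.kp - event.kp))
            unmatched.remove(best)  # each manual event may be matched only once
            true_positives += 1
    precision = true_positives / len(automated) if automated else 0.0
    recall = true_positives / len(manual) if manual else 0.0
    return precision, recall

manual = [Event(1.000, "anode"), Event(1.250, "anode"), Event(2.100, "damage")]
automated = [Event(1.001, "anode"), Event(1.250, "anode"), Event(3.000, "damage")]
print(score_events(automated, manual))
```

Greedy matching keeps the sketch short; a stricter evaluation might use an optimal assignment so that closely spaced events cannot be paired suboptimally.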

EIVA is also taking conventional cameras further, using simultaneous localization and mapping (SLAM) and photogrammetry to map areas while locating the camera’s position relative to what it is mapping. Existing SLAM systems rely on loop closure, and photogrammetry solutions need a lot of image overlap and good visibility and are usually not real-time, Brannan explained. Some also rely on costly stereo cameras, which need calibration and take up space, he said.

EIVA calls its system VSLAM, or visual SLAM. By creating a sparse point cloud on the fly, VSLAM can locate itself (i.e. the vehicle it is mounted on) in its environment and use the model it is creating to automatically track and scan subsea structures. Brannan said this is possible with a single camera, using either still images or frames extracted from video: points are tracked from image to image, a camera track is estimated from those points, and the result is a sparse point cloud from which a digital terrain model is created. An AUV would also know its original absolute position and could then use waypoints (i.e. landmarks) along the route.

The point cloud can then be used to create a dense 3D point cloud and then a mesh, with color and texture added. EIVA has had a team working on this since 2017 and is now testing the system on AUVs. This year, it will be running live projects, with visual navigation, Brannan said, and following that it wants to aid autonomous inspection and light intervention.

SubSLAM

Rovco has a vision to deploy an AUV with an autonomous surface vehicle (ASV) to perform surveys and mapping with its SubSLAM live 3D image and mapping technology. SubSLAM allows an ROV to build a 3D map of its environment on the fly, without using other inertial navigation or positioning systems. The firm calls it live 3D computer vision.

Rovco’s live SubSLAM concept (Source: Rovco)

Rovco’s SubSLAM X1 Smart Camera technology uses a dual camera system to create a live point cloud of what it sees. This is then used to calculate the vehicle’s position relative to what it’s looking at.
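
Rovco hasn’t published how the X1 processes its image pairs, but the standard relation for a calibrated, rectified stereo pair shows how two cameras can yield depth directly. The sketch assumes a pinhole camera model with focal length `f_px` in pixels, principal point (`cx`, `cy`) and a baseline in meters; the function name and all numbers in the example are hypothetical.

```python
def stereo_point(u_left, v, u_right, f_px, baseline_m, cx, cy):
    """Back-project a feature matched in a rectified stereo pair.

    In a rectified pair the match lies on the same image row v, so the
    only difference between the views is the horizontal disparity
    u_left - u_right. Depth follows from similar triangles: Z = f * B / d.
    """
    disparity = u_left - u_right
    if disparity <= 0:
        raise ValueError("disparity must be positive for a point in front of the cameras")
    z = f_px * baseline_m / disparity
    x = (u_left - cx) * z / f_px  # lateral offset in the left-camera frame
    y = (v - cy) * z / f_px       # vertical offset in the left-camera frame
    return x, y, z

# Hypothetical rig: 1000 px focal length, 0.1 m baseline, 1280x720 images.
print(stereo_point(700, 400, 650, f_px=1000, baseline_m=0.1, cx=640, cy=360))
```

Because depth is inversely proportional to disparity, a fixed sub-pixel matching error costs more depth accuracy as range grows, roughly with the square of the range.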

Rovco has been using SubSLAM on a Sub-Atlantic Mojave observation ROV, but is making it compatible with other platforms, said Joe Tidball. The firm plans to acquire its first AUV, a Sabertooth, from Saab Seaeye, this year, integrating SubSLAM in 2020, and then building artificial intelligence (AI) into the system in 2021. It’s then looking to deliver surveys from an ASV from 2022.

Tidball said the system is suitable for subsea metrology and could be used on a tetherless vehicle using acoustic communications, linked to a surface communications gateway and from there via radio/cellular or satellite networks to the cloud, where engineers could access a browser-based measurement tool fed with live 3D data. With AI, the vehicle could then make assessments itself.

Rovco tested its SubSLAM system at the Offshore Renewable Energy Catapult in Blyth, northeast England, in August 2018. Tidball said the company trialed the measurement accuracy it could achieve by first gathering terrestrial survey data on structures in a dry dock, which was then flooded so SubSLAM could do its work in 1.2m visibility. The underwater data was compared against a laser scan. The open-air survey took two hours and had a 1.7mm alignment error; SubSLAM achieved a 0.67mm error from a two-minute scan, Tidball said.
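
The article doesn’t say how the 1.7mm and 0.67mm figures were calculated. One common way to express an alignment error is the root-mean-square (RMS) distance between corresponding points in the measured and reference data; the sketch below assumes those correspondences are already known, and the check-point coordinates are invented.

```python
import math

def rms_error(measured, reference):
    """Root-mean-square distance between corresponding 3D points (output in input units)."""
    if not measured or len(measured) != len(reference):
        raise ValueError("need two equally sized, non-empty point lists")
    squared = 0.0
    for p, q in zip(measured, reference):
        squared += math.dist(p, q) ** 2
    return math.sqrt(squared / len(measured))

# Hypothetical check points in millimeters; each measured point is off by 0.5 mm.
reference = [(0.0, 0.0, 0.0), (1000.0, 0.0, 0.0), (1000.0, 500.0, 0.0)]
measured = [(0.5, 0.0, 0.0), (1000.0, -0.5, 0.0), (1000.0, 500.0, 0.5)]
print(rms_error(measured, reference))
```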

Tidball said the system could remove the need for long baseline (LBL) positioning systems, but cautioned that the cameras need adequate visibility to work. And while the technology can position the ROV or AUV within its environment, a tetherless vehicle moving to another site would still need inertial navigation.

Back to the future

The techniques used to create point clouds on the fly can also be used to create point clouds from existing images or video footage, said Dr. Martin Sayer, managing director at Scotland-based Tritonia Scientific. For example, Tritonia used its technology as part of a net environmental benefit analysis of a platform jacket in a tropical location, where the operator wanted to determine how much extra weight marine growth would add to the jacket, for lifting calculations and onshore disposal planning. Tritonia was given existing ROV footage to assess. This had been shot for fish-life surveys, not jacket biofouling, so it was never intended for 3D modeling. Two HD cameras and a standard camera had been mounted on the ROV: left, right and center.

Because of ambient light in the water, and because most of the footage was directed at fish, about 95% of it wasn’t usable. The rest had been shot at night, which made it more suitable, with no surface interference and better contrast, and allowed a near-complete section of leg to be modeled. By subtracting the known leg volume from the model, the marine growth volume could be calculated.
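
The subtraction step can be sketched as follows. The code assumes the fouled leg section has been reconstructed as a closed, consistently oriented triangle mesh, computes its enclosed volume with the divergence theorem (summing signed tetrahedra formed with the origin), and treats the clean leg as a plain cylinder of known outer diameter and length. Those representations and the example figures are assumptions, not Tritonia’s documented workflow, and turning the result into a weight would also need a marine growth density that the article doesn’t give.

```python
import math

def mesh_volume(vertices, triangles):
    """Volume enclosed by a closed, consistently oriented triangle mesh.

    Each triangle (i, j, k) forms a tetrahedron with the origin; the
    signed volumes v_i . (v_j x v_k) / 6 sum to the enclosed volume.
    """
    total = 0.0
    for i, j, k in triangles:
        (ax, ay, az), (bx, by, bz), (cx, cy, cz) = vertices[i], vertices[j], vertices[k]
        total += (ax * (by * cz - bz * cy)
                  - ay * (bx * cz - bz * cx)
                  + az * (bx * cy - by * cx)) / 6.0
    return abs(total)  # the sign only reflects whether faces wind outward or inward

def marine_growth_volume(fouled_volume_m3, outer_diameter_m, length_m):
    """Subtract a clean cylindrical leg section from the modeled (fouled) volume."""
    clean_leg_m3 = math.pi * (outer_diameter_m / 2) ** 2 * length_m
    return fouled_volume_m3 - clean_leg_m3

# Sanity check on a unit right tetrahedron, whose volume is 1/6.
tet_vertices = [(0, 0, 0), (1, 0, 0), (0, 1, 0), (0, 0, 1)]
tet_faces = [(0, 2, 1), (0, 1, 3), (0, 3, 2), (1, 2, 3)]
print(mesh_volume(tet_vertices, tet_faces))
# Hypothetical: 2.6 m3 modeled envelope over 3 m of a 1 m diameter leg.
print(marine_growth_volume(2.6, 1.0, 3.0))
```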

On the fly

For some, the real potential of all this technology lies in processing data live and feeding that information back into live (albeit supervised) autonomous systems.

There’s a feedback loop that will make these operations more powerful. “Processing the data [that we collect] automatically is when we get value,” said Nazli Deniz Sevinc, uROV project lead, OneSubsea. “Plus, [it’s] a feedback loop into supervised autonomy algorithms and feature detection,” such as Brannan discussed.

A lot is happening. Supervised autonomy, autonomous fault detection and unmanned operations without the need for support vessels are the goal. There are hurdles, such as legislation, which for unmanned vessel systems lags behind the available technology. There are issues with data standards and with handling the amount of data now being created, not least adapting these techniques to today’s workflows (or adapting the workflows themselves). It’s a fast-moving space to watch, with a lot of blurring boundaries (if not images).

Data Tsunami

New imaging technologies are creating new opportunities for subsea visualization and autonomy. They’re also creating a “data tsunami” challenge for operators. Peter Collinson, from BP, said, “One of the biggest concerns is (that) when you start to send fleets of AUVs out there, we will have a tsunami of data coming at us. We have been focused on [sensor/survey] platforms, because we are still building trust in what these systems are and what they can do. The data piece is coming … dealing with that data in a timely fashion. How do we form a digital twin and get through to predictive, time series automated change detection?”
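
At its simplest, automated change detection between two surveys can be sketched as flagging points in a repeat survey that lie farther than some threshold from anything in the baseline survey. This assumes both point clouds are already registered in the same frame; the brute-force nearest-neighbor search, the threshold and the points are illustrative only, and a production system would use a spatial index and more robust statistics.

```python
import math

def changed_points(baseline, repeat, threshold):
    """Return points from `repeat` whose nearest `baseline` point is farther than `threshold`."""
    if not baseline:
        raise ValueError("baseline survey is empty")
    flagged = []
    for p in repeat:
        nearest = min(math.dist(p, q) for q in baseline)  # brute force: O(len(baseline)) per point
        if nearest > threshold:
            flagged.append(p)
    return flagged

# Hypothetical points in meters: the middle point has moved 0.3 m between surveys.
baseline = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (2.0, 0.0, 0.0)]
repeat = [(0.0, 0.0, 0.01), (1.0, 0.0, 0.3), (2.0, 0.0, 0.0)]
print(changed_points(baseline, repeat, threshold=0.05))
```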

While data gathering is evolving rapidly, the future focus is on delivering data to the people who need it, such as pipeline engineers, in a meaningful and useful format. Artificial intelligence (AI) will help, said Malcolm Gauld, from Fugro, by using cloud computing and automatically detecting anomalies or defects. But it will take time to develop these systems, he said. Fugro is working on this and has been performing trials in Perth, which have helped raise issues with the AI, such as distinguishing a silvery pipeline coating from a shark. Future steps include building autonomy into that AI. But Gauld suggested that new models should also be considered. Could pipelines be built with sensors, making them ‘smart’ from the start with all maintenance predictive, he asked. “In the future it will not be about what equipment you use to get that data, but what you get out of that data.”

In fact, in the future we will not even be looking at this data, suggested Joe Tidball, senior surveyor at Rovco. Artificial intelligence and robotics will do the interpretation and decision-making. “I don’t think that in 10 years we will be looking at video anymore. We will simply get reports emailed from robots in the North Sea saying you need to look at XYZ.”

Fast Pipeline Inspection

For pipeline surveys, BP has been focusing on doing things faster. In 2017, BP contracted DeepOcean to use a “Fast ROV” (a Superior ROV from Norway’s Kyst Design) to survey 478km of pipeline, from the Clair and Magnus facilities in the North Sea to Sullom Voe Terminal, onshore Shetland, and from the Brent and Ninian facilities to Magnus, all in just under four days. The survey included laser, HD stills cameras and a Force Technology field gradient cathodic protection (CP) survey system (FIGS), and averaged an inspection speed of 5.1kt, 6x faster than a standard ROV and 5x faster than a modified standard ROV, said Collinson. It also gathered side scan and multibeam survey data. The end result was a 3D scene layer file, a 2D georeferenced mosaic and event/anomaly listings.
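
The quoted figures can be cross-checked with a little arithmetic. One international knot is exactly 1.852km/h, so the sketch below converts the 5.1kt average, computes how long 478km would take at that speed alone, and derives the standard-ROV speeds implied by the “6x” and “5x” comparisons. These are back-of-the-envelope values, not figures supplied by BP.

```python
KNOT_KMH = 1.852  # one international knot in km/h (exact by definition)

def hours_to_cover(distance_km, speed_kt):
    """Time in hours to travel distance_km at a constant speed given in knots."""
    return distance_km / (speed_kt * KNOT_KMH)

fast_rov_hours = hours_to_cover(478, 5.1)   # about 50.6 h, i.e. roughly 2.1 days
standard_rov_kt = 5.1 / 6                   # implied by "6x faster": about 0.85 kt
modified_rov_kt = 5.1 / 5                   # implied by "5x faster": about 1.02 kt
standard_rov_days = hours_to_cover(478, standard_rov_kt) / 24  # about 12.7 days

print(f"{fast_rov_hours:.1f} h at 5.1 kt; {standard_rov_days:.1f} days at {standard_rov_kt:.2f} kt")
```

At the stated average, the 478km accounts for roughly 51 hours of the “just under four days”; the article doesn’t break down the remaining time.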

A Kyst Design Superior ROV being deployed for BP’s pipeline survey by DeepOcean (Source: BP)

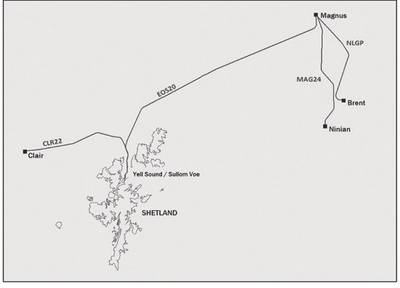

The pipeline system surveyed over four days (Source: BP)

A section of pipeline imaged on the survey (Source: BP)

Stretching acoustics

Schlumberger company OneSubsea is working on a project called uROV. Its goal is an untethered resident subsea vehicle with supervised autonomy, which means being able to communicate with the vehicle via video from shore. But while the availability of 4G cellular networks offshore is opening up through-air video communication, through-water video links are not so easy, Nazli Deniz Sevinc, uROV project lead, OneSubsea, told the Society of Underwater Technology (SUT), International Marine Contractors Association (IMCA) and Hydrographic Society in Scotland (THSiS) joint seminar in October.

OneSubsea’s uROV program plans to use a Saab Seaeye Sabertooth vehicle fitted with Schlumberger technologies. The uROV vision sits somewhere between an AUV and a manually operated ROV, offering untethered but supervised autonomy, Sevinc said. This would involve having a “human in the loop” while the vehicle works up to 3km from a surface communications gateway, e.g. an unmanned surface vessel. When near subsea infrastructure, it would also have a through-water data link with a range of up to 200m.
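
Part of what makes supervised autonomy over an acoustic link hard is simple physics: sound in seawater travels at roughly 1,500m/s, and the real value varies with temperature, salinity and depth. Assuming the link to the surface gateway is acoustic, as the brownfield work described below targets, the sketch estimates the minimum command-and-response delay at the 1km trial range and the 3km target range, before any modem processing or transmission time is added. The constant and function name are illustrative.

```python
NOMINAL_SOUND_SPEED_MS = 1500.0  # assumption: typical seawater value, varies ~1,450-1,550 m/s

def acoustic_round_trip_s(range_m, sound_speed_ms=NOMINAL_SOUND_SPEED_MS):
    """Minimum time for a message to reach the vehicle and a reply to come back.

    Counts propagation only; modem processing, packet length and
    retransmissions would all add to this.
    """
    return 2.0 * range_m / sound_speed_ms

for range_m in (1000, 3000):
    print(f"{range_m} m: {acoustic_round_trip_s(range_m):.2f} s round trip")
```

A delay of several seconds matters little when a human approves a planned task, but it is a real constraint on anything resembling live joystick piloting.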

This would enable 24/7 access to subsea vehicles. But it requires subsea wi-fi, advanced sensing, visual sensing, advanced control and automatic analytics. Sevinc said the uROV would use EIVA NaviSuite for mission planning, and an autonomy layer developed for the Sabertooth. But this relies on real-time feedback, which in turn relies on communications.

For brownfield operations, uROV has been targeting acoustic communications, which inherently means a low data rate. OneSubsea, via its owner Schlumberger’s Boston base, has been working on how much data it can squeeze through 100kbps. In 2017, Sevinc said, the firm achieved boat-to-boat video transfer through an acoustic channel at 100kbps over 1km, and it then achieved similar results with a vertical transfer. Sevinc said this is an upper limit for video, with the remaining bandwidth used for commands. Throughout 2018, this capability was being integrated onto the uROV, with plans to develop video transfer over 3km this year and actual deployments by the end of the year. Other kit being integrated onto the uROV includes LiDAR (light detection and ranging) laser technology from 3D at Depth.
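
To see why 100kbps is tight for video, it helps to work out the per-frame budget. The split between video and commands, the frame rate and the frame size below are all hypothetical; the article gives only the 100kbps link rate.

```python
def bytes_per_frame(link_bps, command_bps, fps):
    """Average bytes available per video frame after reserving command bandwidth."""
    video_bps = link_bps - command_bps
    if video_bps <= 0 or fps <= 0:
        raise ValueError("need positive video bandwidth and frame rate")
    return video_bps / fps / 8  # bits -> bytes

# Hypothetical: 4 kbps reserved for commands, 5 frames per second.
budget = bytes_per_frame(100_000, 4_000, 5)
raw_vga_gray = 640 * 480  # one uncompressed 8-bit grayscale VGA frame, in bytes
print(f"{budget:.0f} bytes/frame; needs ~{raw_vga_gray / budget:.0f}:1 compression")
```

Under these assumptions each frame gets about 2.4kB, so even a small grayscale image has to be compressed by two orders of magnitude.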